The International Conference on Machine Learning (ICML) was in NYC this year! Unfortunately(?) for me, I moved from NYC to Zürich two months ago. Fortunately for me, I was able to return to attend the conference. Instead of doing a day-by-day breakdown (as I did for NIPS and AAAI), this post will be arranged thematically. Let's see how I deal with the hard group assignment problem... Skip to the bit you care about.

Caveats:

- I missed some non-trivial fraction of ICML due to finishing my poster, helping collaborators with a grant application, and coming down with illness

- Future conference goal: finish my poster before I travel.

- Also don't try to print A0 posters in the USA. It ain't pretty.

- I took very patchy notes, haven't read all the papers deeply.

Volunteering at ICML

I was a student volunteer for ICML, which consisted of working two ~five-hour shifts at the conference. For me these were both Registration Desk. I had 07.30-12.30 on the first and last days, which was possible purely by my being in European time for much of the trip. I woke up at 4am on the first day. Here are some observations:

- people actually register on the last day, but more people just want to get their badge reprinted

- protip: don't forget your name badge!

- you paid hundreds of dollars to get that piece of paper

- some people turn up really early to register

- 90% of ICML attendees were DeepMind employees

- registration desk workers could easily be replaced by name-badge-printing kiosks

- conference attendees expect a pile of swag upon registration: pens and bags and mugs and programs booklets. Not receiving these items is cause for thinly-veiled indignation

- queues for registration are worst in the gap between sessions, naturally

- people manage to make it to the top of a line without attempting to find the documents they need

- I have also observed this phenomenon in airports and banks

- why

- I registered a bunch of people whose papers I have read, and I maintained composure

- if I were running the registration desk with excessive time to spare, we would have had a graph of cumulative registrations over time, maybe with a breakdown for geographic origin/broad affiliation

Overall it was surprisingly fun. Apparently I rather enjoy that kind of work, so if this whole research thing doesn't work out I have a bright future as a vending machine.

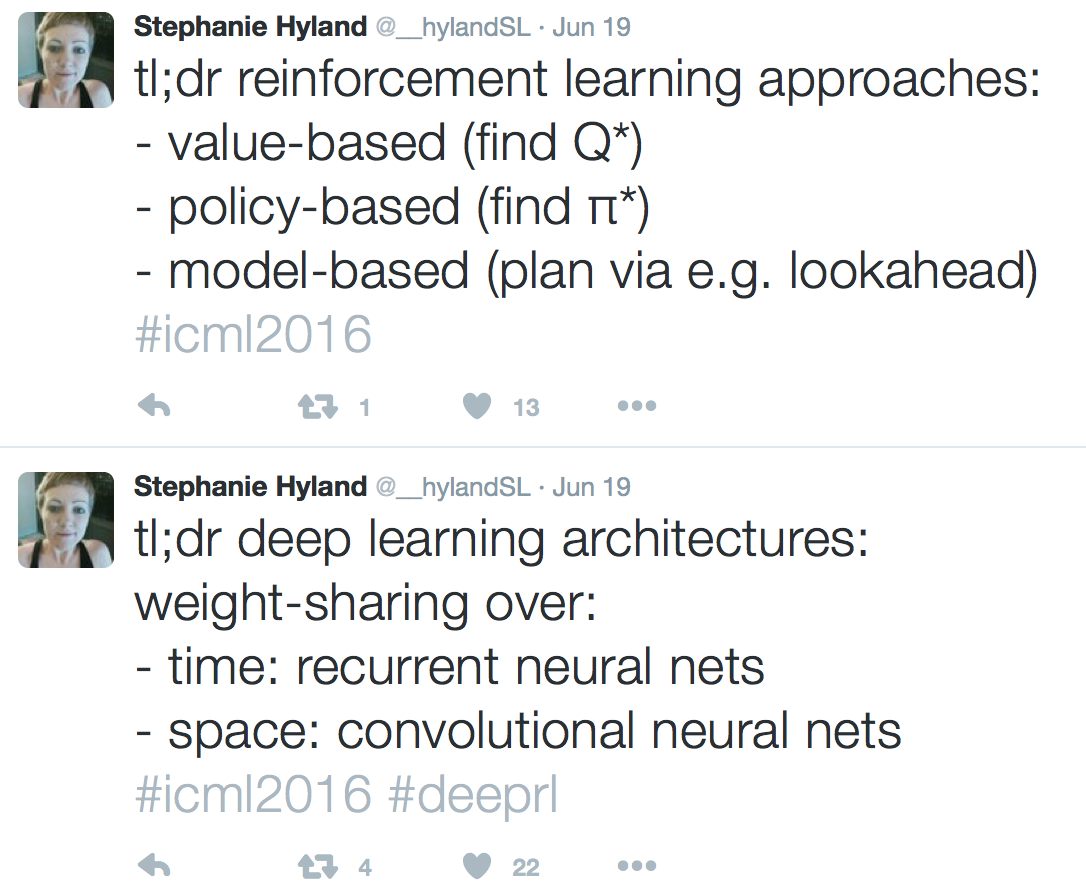

Tutorial on Deep Reinforcement Learning

I was only able to attend one tutorial due to volunteering, and it was Deep RL. It was so popular there were two overflow rooms. Intense community interest in deep RL continues. Here's an abbreviated version:

The deep part comes into play when you use a deep neural network to approximate your value function, policy, environment etc.

Interesting Papers/Talks

These are the papers I flagged in the conference app. Did I attend all of these talks? No. Did I attend all of the posters? Also no. In hopefully-meaningful categories:

Neural Networks

- Learning to Generate with Memory: Chongxuan Li, Jun Zhu, Bo Zhang: a deep generative model with external memory and attention mechanism. The deepness comes in through some nonlinear functions on latent variables which are defined by (deterministic) deep neural networks. Each layer in the network has access to its own external memory, which is seemingly novel in this model. In each layer lower-layer information is combined with the memory to produce the output, using some attention function taking as input the information from the lower layer. I'm not entirely convinced by the experiments that the memory mechanism actually helps that much, although they say it gives better 'qualitative' results.

- Unitary Evolution Recurrent Neural Networks: Martin Arjovsky, Amar Shah, Yoshua Bengio: The idea here is to use a unitary matrix as the evolution operator in an RNN, with a hope to avoid exploding gradients. It seems to result in an RNN which can retain information for longer than a LSTM, and while gradients do vanish slowly, they do so more slowly than other models, and don't explode. I'm working on something of an extension to this work right now, and I had the pleasure of speaking with the authors at length. More details in forthcoming paper, I guess? Or blog post, we'll see.

- Strongly-Typed Recurrent Neural Networks: David Balduzzi, Muhammad Ghifary: I really like the spirit of this work. Let's try to understand RNNs! And take inspiration from functional programming and physics, because why not? The physics part is roughly to preserve 'dimensions' (think units) by preserving the basis of the space. I took issue with this because I think any map from a space to itself is already preserving something (preserving being in the space, that is), but what that means for the model is less clear. The part from functional programming is about separating state and computation, a separation into learnware (with parameters) and firmware (having no parameters, but having state).

- Group Equivariant Convolutional Networks: Taco Cohen, Max Welling: Wild simplification/mild understatement: they extend convolutional layers to other kinds of symmetries, not just translational.

- Training Neural Networks Without Gradients: A Scalable ADMM Approach: Gavin Taylor, Ryan Burmeister, Zheng Xu, Bharat Singh, Ankit Patel, Tom Goldstein: ADMM stands for Alternating Direction Method of Multipliers. They use this with Bregman iteration to train networks without SGD! This method scales linearly over cores, and they compare this to an asynchronous SGD model called Downpour, which scales very strangely. SGD, having many small computations is good for GPUs, whereas CPUs are better for a smaller number of expensive calculations, preferably involving a lot of data. This approach also combats the vanishing gradient problem (unsurprising given there are no gradients to vanish: gradients come pre-vanished), and SGD's tendency towards lingering near saddle-points.

Reinforcement Learning / Bandits

- Opponent Modeling in Deep Reinforcement Learning: He He, Jordan Boyd-Graber, Kevin Kwok, Hal Daume III: They develop a model called DRON: Deep Reinforcement Opponent Network, which is close enough to TRON to make me happy. It's based on Mnih's deep Q-networks. DRON has both policy-learning module and opponent-learning module. It's essentially two networks, and they look at ways of combining them: concatenation and using mixtures-of-experts.

- Why Most Decisions Are Easy in Tetris—And Perhaps in Other Sequential Decision Problems, As Well: Ozgur Simsek, Simon Algorta, Amit Kothiyal: by 'easy' they mean: "one can choose well among the available actions without knowing an evaluation function that scores well in the game". The idea is that comparison becomes easy when some criteria are met, and the relationship between features and criterion (of the comparison) is linear. This linearity requirement seems restrictive, but holds true for the best known tetris player (BCTS).

- Smooth Imitation Learning for Online Sequence Prediction: Hoang Le, Andrew Kang, Yisong Yue, Peter Carr: They're looking at imitation learning where actions and the environment are continuous, but the environment is exogenous (not affected by actions). They consider the state space to be both environment and actions (so the policy considers the previous action taken), and enforce smoothness of actions. The application is smooth camera control (the paper is from Disney research), hence smooth actions. Their approach learns a fully deterministic stationary policy, and they have some other contributions whose gravity are somewhat lost on me, but are presumably important.

- Asynchronous Methods for Deep Reinforcement Learning: Volodymyr Mnih, Adria Puigdomenech Badia, Mehdi Mirza, Alex Graves, Timothy Lillicrap, Tim Harley, David Silver, Koray Kavukcuoglu: As an alternative to experience replay, they asychronously run multiple agents in different instances of the environment, in parallel. This can then be run on a multi-core CPU rather than a GPU, and is more resource efficient. Some nice ggplots, too.

- Conservative Bandits: Yifan Wu, Roshan Shariff, Tor Lattimore, Csaba Szepesvári: a multi-armed bandit problem where a company wants to maximise revenue while keeping revenue above a constant baseline. In this setting there exists a 'conservative default action', and they propose an extension to UCB (upper confidence bound) where a budget is accumulated using the conservative arm, and when large enough allows for 'safe' exploration.

Representation Learning

- The Information Sieve: Greg Ver Steeg, Aram Galstyan: What an intriguing title. This is about representation-learning. The idea seems to be to iteratively 'sieve' the data, extracting a latent feature at a time, then passing on a version of the data with the contribution from that feature somehow removed, and so on. Sieving. It relies on the total correlation, or multivariate mutual information, and they describe a way for finding the factors which cause this total correlation to decompose into non-negative contributions.

- Complex Embeddings for Simple Link Prediction: Théo Trouillon, Johannes Welbl, Sebastian Riedel, Éric Gaussier, Guillaume Bouchard: a scoring function for link prediction (subject, predicate, object type triples) which uses complex-valued embeddings for entities. Using the inner product in complex space amounts to taking dot products with complex conjugates, which handles asymmetry of the triples. The relationships appear to be parametrised with complex-valued vectors. At a glance it looks like a complex version of DistMult.

Other / ???

- ForecastICU: A Prognostic Decision Support System for Timely Prediction of Intensive Care Unit Admission: Jinsung Yoon, Ahmed Alaa, Scott Hu, Mihaela van der Schaar: the application here is predicting when/if a patient needs to be admitted to the ICU. They cast it as an optimal stopping problem, and try to learn the unknown stopping rule of the stochastic process: how the physician decides (on the basis of the stream of data) to admit the patient to ICU. They assume patients belong to 'stable' or 'deteriorating' classes, which describe different distributions over physiological streams.

- Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning: Yarin Gal, Zoubin Ghahramani: I'm not going to give this paper justice by skim-summarising it, so I'll just quote a sentence: _"In this paper we give a complete theoretical treatment of the link between Gaussian processes and dropout, and develop the tools necessary to represent uncertainty in deep learning". Cool cool cool.

- CryptoNets: Applying Neural Networks to Encrypted Data with High Throughput and Accuracy: Ran Gilad-Bachrach, Nathan Dowlin, Kim Laine, Kristin Lauter, Michael Naehrig, John Wernsing: Homomorphic encryption! Homomorphic encryption only allows for addition and multiplication, and ideally with low-degree polynomials, so they have to approximate the usual max pool, sigmoid etc. transformations. One also has to be careful as all operations in the cryptosystem are applied modulo some number. A key thing to note here is that they're not training on encrypted data, just predicting.

- The Arrow of Time in Multivariate Time Series: Stefan Bauer, Bernhard Schölkopf, Jonas Peters: Non-Gaussian noise breaks time symmetry in multivariate autoregressive moving average (VARMA) models.

Geometry in Machine Learning Workshop

Is the title of this workshop an intentional Lord of the Rings reference? I sure hope so.

I spent the whole day at this workshop, since I was presenting a poster and also yay differential geometry.

So why care about geometry for machine learning? Firstly, by geometry we're talking about differential geometry, which is focused on differentiable manifolds (manifolds which locally look flat). Data usually lies on a manifold. We often assume this manifold is Euclidean space (nice and flat), but it often isn't. A simple example is data which lies on a circle, which if you've encountered if you've ever dealt with angular measurements. Gregory S. Chirikjian gave a really nice illustrating example in his talk "Learning and Lie Groups": if you consider the range of motions available to a simple noisy robot, after a certain number of steps its possible location will be given by some probability distribution (this is called the 'banana distribution'). This distribution is not Gaussian in x and y (the coordinates of the Euclidean manifold a.k.a. the plane the robot was moving on), but if you recall that its motions were constrained to come from a Lie group (specifically the planar special Euclidean group, SE(2), consisting of translations and rotations in the plane), you can define a Gaussian distribution relative to coordinates in that group space (since Lie groups are manifolds), and this distribution describes its location. For more details, see the paper: The Banana Distribution is Gaussian: A Localization Study in Exponential Coordinates.

Reasons to be careful when your data lies on a manifold seem to be:

- doing statistics requires a notion of distance, so you must use the distance on the manifold

- gradient-based optimisation requires, well, gradients, so you must use the gradient on the manifold

This second point is actually highly relevant to the work I was presenting at the workshop, which will become entirely clear once I put the paper on the arXiv.

I think machine learning as a field already cares about manifolds a lot, particularly when it comes to finding low-dimensional subspaces within a dataset. This workshop was however primarily concerned with cases where the (sub-)manifold is already known.

And now, the content: (also, you can get the slides for these talks on the workshop page)

Nicolas Boumal spoke about Optimisation on Manifolds. Here is his PhD thesis on the topic. The take-homes were:

- we have some convergence guarantees for non-convex optimisation on manifolds, see the paper: Global rates of convergence for nonconvex optimisation on manifolds, Boumal, Absil and Cartis.

- he has developed a Matlab toolbox for optimisation on manifolds: Manopt

- free book, Optimization Algorithms on Matrix Manifolds, Absil, Mahony, Sepulchre

Laura Balzano spoke about Subspace Learning by Incremental Gradient Descent on the Grassmannian.

- the Grassmannian is a manifold comprised of all low-dimensional subspaces of a particular ambient space, I believe with a pre-specified dimension (so it could be the space of all lines, for example)

- her focus area is streaming data, where you want to use first-order methods (not enough data to estimate hessians, for example)

- doing SVD where the learned matrices are elements of the Grassmannian (that is, living in a lower-dimensional space), so gradients are on the Grassmannian

- more details probably in this paper: Global Convergence of a Grassmannian Gradient Descent Algorithm for Subspace Estimation, Zhang & Balzano

- also featuring a live demonstration of separating foreground from background in video! using a laptop and a webcam! More here: Online Algorithms for Factorization-Based Structure from Motion - Kennedy, Balzano, Wright, Taylor

Gregory S. Chirikjian spoke about Learning and Lie Groups as I mentioned above:

- paper again; The Banana Distribution is Gaussian: A Localization Study in Exponential Coordinates, Long, Wolfe, Mashner, Chirikjian

- also a book (although not free ;_;): Stochastic Models, Information Theory, and Lie Groups, Chirikjian

Tom Fletcher spoke about Probabilistic Geodesic Models. The motivation is shape analysis (with a medical application in brains), particularly for dimensionality reduction and regression.

- he gave a nice introduction to the idea of shape: basically, geometry of object invariant of position, orientation, size: when you remove these things you are on the SHAPE MANIFOLD

- Kendall's Shape Space: defined in a complex space. The idea here is that multiplication by a complex value is a rotation and scaling in complex space, so if you 'quotient' that out, you get Kendall's Shape Space, a complex projective space. (and amusingly for me, Projective Geometry is a class I used to sneak into)

- back to the idea that statistics requires a notion of distance, he defined for us the fréchet mean, allowing points to be 'averaged' on a manifold, and allowing you to define something that looks like a Gaussian-on-a-manifold...

- but a different one than that proposed by Chirikjian, because: there are many ways to arrive at a Gaussian distribution (as a solutions to heat and diffusion equations, as maximum-entropy distributions, the central limit theorem, maximum likelihood solutions to least squares, etc.) and while these seemingly converge on the much-loved Normal distribution in Euclidean space, this doesn't happen on other manifolds... so we end up having 'normal distributions' that look different depending on which definition we started with... oh dear.

- I think it was at this point that someone voiced the concern that in an arbitrary manifold, the distance metric is locally defined (because it is defined on the tangent space at a point), so the normalisation constant in your Gaussian-on-a-manifold actually depends on the centre of the distribution. The solution to this is to only look at homogeneous manifolds, manifolds whose isometry group acts transitively, so the manifold 'looks the same' everywhere.

- some homgeneous manifolds: spaces with constant curvature, Lie groups, Stiefel manifolds, Grassmiannians, dot dot dot

- Open Access Series of Imaging Studies (OASIS): open access brain (MRI) images!

- then it got into geodesic regression and the manifold of diffeomorphisms, with a shout-out to the Sobolev metric, and a mention of Gaussian processes, thus ensuring my interest was piqued

- generalisation of probabilistic PCA on a Riemannian manifold: Probabilistic Principle Geodesic Analysis, Zhang and Fletcher

- another relevant paper: Geodesic Regression and the Theory of Least Squares on Riemannian Manifolds, Fletcher

Katherine St. John spoke about Dimensionality Reduction on Treespaces, specifically evolutionary trees. Hey, biology! Phylogenetics! The core issue is: you see a set of organisms (their genomes, rather) and want to find the optimal evolutionary tree, out of a very very large set of trees. What to do? Metrics on trees usually look at things like rearrangements ("remember balancing red-black trees?"), distances which are NP-hard to compute. I apparently didn't take many notes during this talk, so have some likely-relevant references:

- The Shape of Phylogenetic Trees (Review Paper), St John

- Characterizing Local Optima for Maximum Parsimony, Urheim, Ford, St. John

Mikhail Belkin spoke about Eigenvectors of Orthogonally Decomposable Functions: Theory and Applications. This was partially lost on me, but what I got was: - we have a well-defined notion of eigenvectors and eigenvalues for matrices, but what of tensors (multilinear forms)? There's no spectral theorem here, the idea of rank is different, 'things are just sort of unpleasant' - focusing on orthogonally-decomposable tensors makes things easier (sort of an analogue of eigen-decomposition) - then the trick is to recover the 'basis' the tensor is orthogonally-decomposable on - he said this was primarily about work with Rademacher and Voss, so this paper is likely the reference: Basis Learning as an Algorithimic Primitive, Belkin, Rademacher, Voss

Finally, Stephen Marsland spoke about Principal Autoparallel Analysis: Data Analysis in Weitzenbock Space. This talk got into discussion of connections (maps between elements of tangent spaces), and their curvature, and torsion. It had the same effect that looking at my copy of Spivak's 'A Comprehensive Introduction to Differential Geometry' has: excitement to (re)learn these things but the vague guilt of indulgence in intellectually stimulating but maybe not so directly applicable mathematics. But so cool. Also the sense of having come so close to getting fibre bundles. One of these days.

- this talk included an entertaining story about the history of Weitzenbocks spaces, Cartan not receiving recognition, and racist messages hidden in books. Forgetting the umlaut in Weitzenböck's name is OK, because he was a racist.

- we usually look at the Levi-Civita connection, which is unique and torsion-free. This one weird non-zero torsion tensor. Mathematicians hate it!

- intuitive explanation of curvature: the amount you've rotated upon returning to your original position

- intuitive explanation of torsion: the amount you've failed to return to your original position, sort of, or, 'how hard it is to stay on the manifold'

- Riemann-Cartan space reduces to: Riemannian if torsion is 0, and Weitzenbock if curvature is 0

- cryptic statement in my notes: 'prior over tangent spaces?'

And that's where my notes end.

The poster session was really good in that I got to speak about my work a lot, but really bad in that it ended before I got to see anyone else's work, or talk much about my work at all. I had so many more things to say! Good thing I have a blog. I'm also working on a manuscript which is very almost ready to go on the arXiv, honestly.

Computational Frameworks for Personalisation Workshop

Mistakes were made. I spent the first quarter of this workshop working the registration desk, and the second quarter standing outside the workshop. The afternoon I spent at Machine Learning in Social Good Applications, which was not a mistake (although I arrived too late to get a t-shirt in my size), as I think I had already seen the work from David Blei's talk present at the New York Academy of Sciences Machine Learning Symposium.

The name of the workshop got truncated to 'Computational Frameworks' on the sign outside, so I got to feel vaguely useful providing disambiguation services while trying to glimpse content.

The content I was most interested in (and managed to catch part of) was Joelle Pineau speaking about Contextual Bandits for Effective Discovery of Personalized Adaptive Treatment Strategies. The focus here is on adaptive protocols, such as adaptive clinical trials or adaptive treatment strategies. In each case, earlier outcomes influence subsequent decisions: it's, you know, adaptive. The computational framework they use is the multi-armed bandit: you have a set of K actions with probabilistic outcomes. You don't know the outcomes or the probabilities, but you have to select actions to maximise some expected utility. This poses the classic exploration-exploitation trade-off so integral to sequential decision making. Once you discover an 'ok' action, do you choose it repeatedly (exploiting it), or do you attempt to find yet better actions, risking stumbling upon inferior outcomes (exploration)? This also raises questions about whether it's possible to explore 'safely', which was the subject of Andreas Krause's keynote at AAAI this year.

Back to exploration-exploitation: In adaptive Bayesian trials, they use Thompson Sampling. This requires having a posterior over models, sampling one and selecting the action with highest expected utility relative to that model. So you act greedily given your belief (exploiting), but your belief is random (exploring). Another approach is to define an upper confidence bound (Auer 2002), where you estimate the confidence of the estimate of the expected utility of an action using how many times the action has been tried, and select arms maximising the estimate + the confidence bound. In this way, you select actions which are either very good, decent and uncertain, or very uncertain. The third example in her slides is BESA: Best Empirical Sampled Average (Baranski, Maillard, Mannor, 2014), which seems to involve subsampling the arm which has more data, then selecting the one with highest expected reward.

The specific application was cancer, specifically trying to minimise tumour volume in mice. They did a pure exploration phase, where mice with induced tumours had random treatments of combinations of two drugs (fluorouracil and imiquimod). They then considered the adaptive problem of selecting treatments given the current tumour size. This makes it a contextual bandit problem. They used Gaussian Processes to model the reward function over the space of continuous contexts (tumour sizes) and arms (discrete treatments). Then, given a specific context, you can select the arm maximising the expected reward, using these earlier-described methods. At this point there's a reference to Durand & Pineau 2015 for the GP extension of BESA but I somehow cannot find it. The idea seems to be to re-estimate the GP using a sub-sample of the data, then using that GP to estimate the maximum expected reward. Preliminary results using the adaptive approach look promising, and they're interested in doing sequential reinforcement learning (rather than bandits) in the future.

Machine Learning In Social Good Applications

I approximately made it to the Disease section of this workshop, which is unfortunate because I would have liked to see Quantifying and Reducing Stereotypes in Word Embeddings, Bolukbasi et al. I'd consider this under the umbrella task of removing unwanted patterns from data, or perhaps more accurately, training a model such that it doesn't pick up on these patterns. See also 'racist algorithms' and this ProPublica piece on Machine Bias. Will there be a conference summary where I don't mention Fairness, Accountability and Transparency in Machine Learning? Probably not.

Anyway, I have an especially strong memory of Barbara Han's talk on Predicting Novel Tick Vectors of Zoonotic Diseases, possibly because it contained many horrifying images of ticks. This work is part a project to use machine learning to predict zoonotic diseases, and also featured a (iirc) undergraduate researcher! The problem is basically: ticks act as disease vectors, but not all of them carry zoonoses. They mined entomological literature (and maybe other sources) to come up with feature sets for ticks, trained a supervised classifier (if I recall they used boosted regression trees), and predicted novel vectors. They also did some feature analysis to understand what differentiates these classes of tick. It turns out that a strong predictor is the number of hosts the tick feeds on. It seems like this could be confounded with the need to feed on a specific host (since that host has to be reservoir of the zoonosis), I asked and they hadn't done a breakdown looking at the specific species. Anyway, a straight-forward machine learning task but an important problem in ecology and epidemiology.

A Rant about the Venue

Times Square is the worst. Times Square is why people hate NYC. Tunnels should be built under Times Square so we never have to look at it. I acknowledge its utility to tourists and I reserve through gritted teeth some respect for their bloody-minded dedication to milling at junctions, drifting absent-mindedly across sidewalks, and stopping suddenly. I just don't enjoy being the person trying to weave between them on my way to lunch, especially when it's summer in NYC and I'm an inappropriately-attired Irishwoman. (We don't do 'direct sunlight' very well.)

I thought of some reasons to locate a conference on Times Square:

- the rest of the world has been destroyed

- Times Square stands alone in the void, a final stand for humanity against the encroaching oblivion

- there is nothing left to do but hold conferences

- there are no other appropriate venues in New York City

- conferences require a density of hotels only offered by Times Square

- holding a conference in what is probably a very expensive hotel is a demonstration of power and status

- for... someone. ML researchers maybe? 😎

The venue itself was interesting because the conference was distributed across multiple floors. This meant lots of using the futuristic elevator system. I was involved in more than one 'what algorithm does this elevator system use' conversation. And hey, here's the chapter of the Sutton Reinforcement Learning book about Elevator Dispatching. I wonder how many interesting methods have been developed to solve simple problems arising in the work environment of engineer/scientist types. I certainly used to think about the optimal road-crossing strategy when I lived in NYC (the problem is slightly interesting because east/west and north/south aren't symmetric due to differing block lengths and crossing times, so always going with the go sign isn't an optimal policy[citation required]).

The negative side-effect of this layout was (to me) a lack of general 'focal point' for the conference, especially since there were various other things going on in the hotel. (Excitingly, on the final day there was an Edward Tufte seminar on the same floor as us.)

TL;DR limit registrations to a number your venue can comfortably accommodate. Turning people away is sad (especially if they are, like me, students who only knew they were going once their workshop submission was accepted), but overcrowding is detrimental to good conferencing.

In Conclusion

Despite missing about half the conference between volunteering, working and being sick, I saw a lot of good work and had some great discussions with people. I'm a bit disappointed there was no proper closing ceremony with summary statistics like at NIPS (unless it was at the party on the Wednesday, which I spent coughing in my hotel room). The multi-track format makes it a little hard to get an overview of the broader field, ad there was a strange lack of closure on the last day. I'd say I'm looking forward to next year, but I think* it's going to be in Sydney, so we'll see about that.

*I don't know why I think this and I can't find any evidence supporting it. I did however learn that ICML also stands for:

- international conference on minority languages

- international congress of medical librarians

- international conference on chronic myeloid leukaemia

- international conference on malignant lymphoma

The more you know.