AAAI 2016 by the day

I started writing this in Phoenix airport, so if the current trend (n=2) continues, I'll start recounting my next conference half-way through, with interesting implications for the latter half of the post. This was my first time attending the Association for the Advancement of Artificial Intelligence Conference (AAAI), so I made sure to spend most of it comparing it to NIPS. I stopped taking notes towards the end, so this coverage is a bit skewed.

Thursday

Shameless plug for a great vegan restaurant in Phoenix: Green. I would have eaten there a lot more if it were a bit closer to the conference centre. I ended up going to Vegan House a few times. A fair runner up in the list of Best Vegan(-ish) Restaurants in Downtown Phoenix (there are two).

Friday

Shortly before dawn, it became cold enough to sleep. I appreciated the vastness of the Arizona sky and the eerie absence of fellow pedestrians as I relocated to my downtown hotel. Coming from Dublin and then New York City, I find empty paths unsettling, especially coupled with wide roads and low buildings. I passed by a man selling paintings of owls from a rucksack, and order was restored.

Friday and Saturday of the conference were tutorial/workshop days (the distinction between these categories is not clear). On Friday morning I went to...

Organ Exchanges: A Success Story of AI in Healthcare: John Dickerson and Tuomas Sandholm

I'd seen John Dickerson speaking at the NIPS 2015 Workshop on Machine Learning in Healthcare (some thoughts on MLHC in my NIPS 2015 post), so I was already somewhat familiar with this work. I think he's a good speaker, so even though this topic is not entirely relevant to me, I figured I'd get something out of the tutorial. This was true to some extent - my attention started to flag at some point into what was essentially a 3.5 hour lecture.

The link to the slides is above and here, so I will just outline the main idea and skip the algorithmic details.

Kidney exchanges: you need a kidney, and a family member/friend/loved one is willing to donate one. Unfortunately, they may not be compatible. The solution is to 'trade' donors with someone else: "I'll give you my mother's kidney for your girlfriend's kidney", or, "I'll give you my mother's kidney so your girlfriend can give her kidney to that other person, and their friend can give me their kidney", and so on. This amounts to finding cycles in a graph (the second example being a 3-cycle), which brings us into the wonderful world of combinatorial optimisation. The exchange actually requires everyone to go under the knife at the same time (something about trading organs I don't quite recall), so there are physical and logistical limits on the length of the cycle.

They mentioned some other barter-exchange markets, such as

- holiday homes (intervac)

- books (paperback swap, book crossing)

- odd shoes (national (US) odd shoe exchange)

These are neat. People exchanging used items instead of buying new/throwing away is obviously great, and I approve of anyone supporting such efforts. It's what the 'sharing economy' should have been... and now back to organs.

An interesting (and amazing!) thing can happen in these kidney exchanges: sometimes an altruistic donor will show up; someone who just has too many kidneys and wants to help out. These produce 'never-ending altruistic donor' chains ("a gift that gives forever"), and have apparently become more important than cycles for the kidney-matching problem.

I zoned out of the tutorial for a bit to discuss the feasibility of simultaneous translation, prompted by this article: The Language Barrier is About to Fall. My gut reaction is to say 'it's too hard', but that's motivated by my enjoyment of learning languages - part of me (selfishly) doesn't want this problem solved. I'm however learning to temper my skepticism when it comes to what machine learning can achieve, and we're actually getting pretty good at translation (for some language pairs) so I'm pretty optimistic about this. And breaking language barriers, if it can be done cheaply, could be immense. I emphasize the relevance of cost because I see language most prohibitive not for holiday-goers but for migrants, who may not have the resources to buy a babelfish.

There are a lot of subtleties to consider in the kidney exchange problem, and much work has been done: see the slides.

They concluded the tutorial with a discussion of other organ exchanges. Kidneys are sort of 'easy' because the cost to the donor is quite minimal, unlike in e.g. lung exchanges where the donor's quality of life (and life expectancy) are impacted. One can also do living donor liver exchanges, where some fraction of the donor's liver is removed. There are essentially no altruistic donors here. Dickerson suggested combining multiple organs, so you thread a liver and kidney chain together. Perhaps a kidney patient's donor would be willing to donate liver to someone whose donor would give a kidney, and so on.

My plan was to go to AI Planning and Scheduling for Real-World Applications (Steve Chien and Daniele Magazzeni) in the afternoon, but I made the mistake of being outside for slightly too long during lunch, and I spent the rest of the afternoon recovering in a dark and cool hotel room. Irish people: handle with care, keep out of direct sunlight.

Student Welcome Reception

One really nice thing about AAAI was the student activities. Being a student at a conference can be bewildering: there are so many people who seem to know each other, talking about things they seem to know about! I was also there by myself (my group does not typically attend AAAI), so the icebreakers they ran saved me from spending the rest of the conference lurking in corners and hissing at people.

The actual ice-breaker activity was weird (although seemingly effective): we had to take photographs with a AI/AAAI/Phoenix theme (artificially intelligent fire, maybe) featuring ourselves. A ploy to get pictures for a website? Possibly. We never did find out who won the fabled prize.

Saturday

Excluding a brief foray into the tutorial about 'Learning and Inference in Structured Prediction Models', and fruitless wandering in search of coffee shops open on a Sunday, I spent much of the day at...

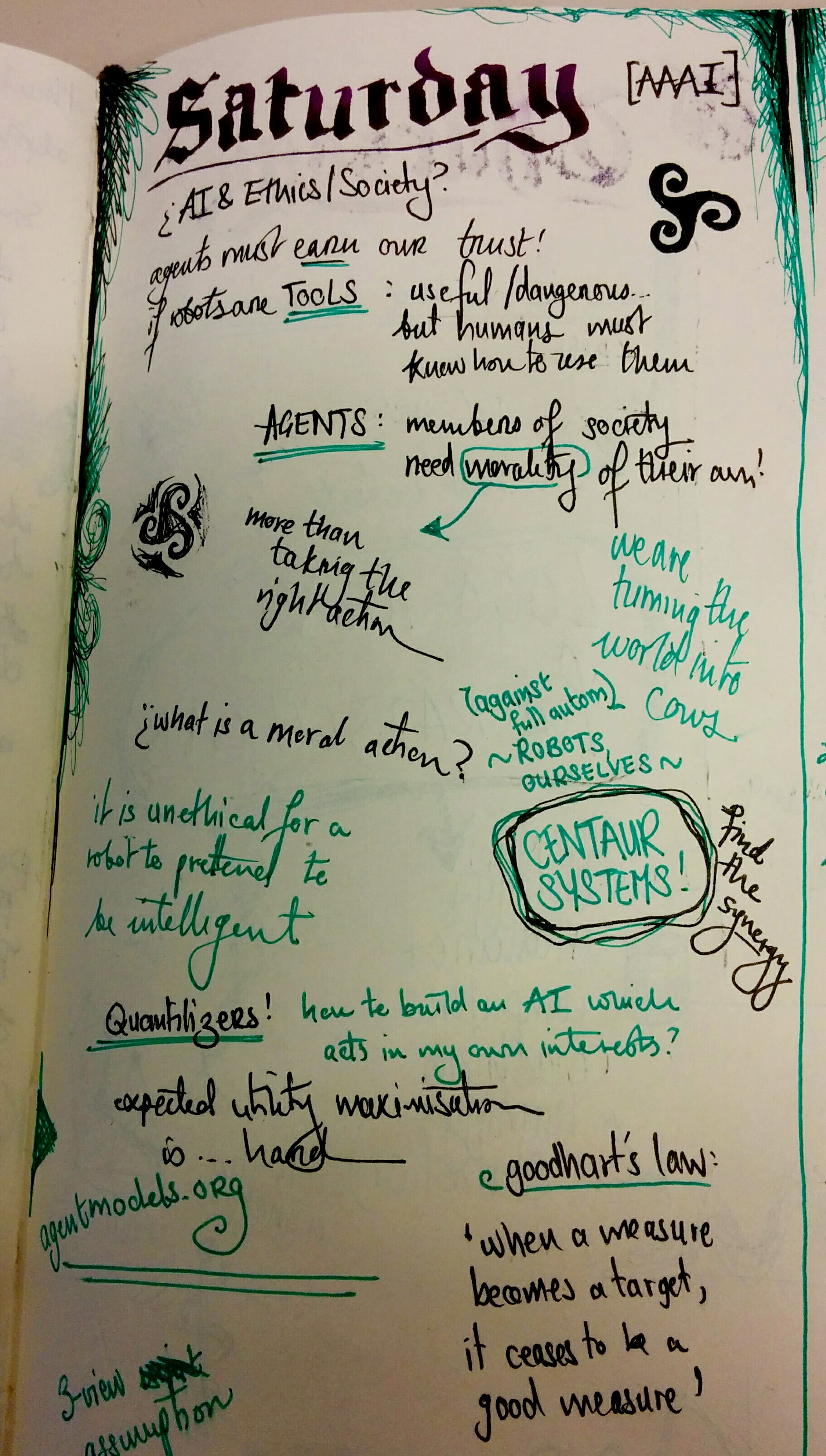

Workshop on AI, Ethics, and Society

This workshop had overlap in content/speakers/organisers with the 'Algorithms Among Us' symposium at NIPS 2015 (some thoughts here). My interests might be obvious by now.

This was an interesting workshop. There was a mix of machine learners, AI researchers, (possibly) philosophers and miscellaneous other. There were fewer arguments than I would have expected. It's not that that I particularly wanted to see (verbal) fighting, but people seem quite passionate about, e.g. whether or not The Singularity is something to worry about, so I expected more gloves on the floor.

People are concerned about dangerous (powerful) AIs - how do we ensure they don't enslave us all in pursuit of paperclip-making? Do we have moral responsibility towards them? Should they feel pain? Should we be allowed to turn them off, once they're active/alive(?)? Are simulations of humans humans? These were some questions raised.

Some more, uhh, short-term concerns included the risks of adversarial machine learning, the effects of AI on labour markets (more on this later), the difficulty of measuring progress towards AGI, and enough other things that I didn't leave the workshop thinking everyone is feeling Existentially Threatened. I certainly am not.

I'm glad some people are thinking about long term threats (diversity of tactics!), but I am much more worried about the present and near future. AI (rather machine learning) already influences people, in potentially irreversibly life-altering ways (to put it mildly), and I fear the technology is becoming integrated into society faster than anyone can measure its harm (see also: vaping). It's also quite easy for us as researchers to pretend our work is apolitical, that we simply explore and create things, blissfully ignorant of negative consequences should our creations be misused. Positive applications presumably motivate much great work, and I don't wish that people stop this work, necessarily. We just need to acknowledge that we cannot un-discover things, and that people who don't understand the limitations of technology may still use it.

I am meandering to a point: efforts such as the Campaign to Stop Killer Robots are good and should be publicised and discussed. Perhaps the Union of Concerned Scientists should start thinking about 'algorithmic/autonomous threats' (to human lives, livelihoods and the environment). My ideas here are half-formed, which is all the more reason I'd like to see discussions about such issues at similar workshops. It's certainly important that AIs have ethics, but what about the ethics of AI researchers?

Sunday

The conference begins in earnest!

Steps Toward Robust Artificial Intelligence - Thomas G. Dietterich

Quantifying our uncertainty (as probabilistic approaches to AI attempt to do) is about known unknowns: rather, the thing we know we are uncertain about has to appear somewhere in the model. Dietterich drew attention to unknown unknowns: things outside the model, perhaps outside our algorithm's model of the environment.

One way to tackle this is to expand the model: keep adding terms to account for things we just thought of. A risk of this is that these terms may introduce errors if we mismodel them. He suggested that we instead build causal models, because causal relations are more likely to be robust, require less data and transfer to new situations more easily.

Regarding new situations: what happens if at 'test' (deployment, perhaps) time, our algorithm encounters something wildly different to what it has seen before? Perhaps instead of allowing it to perform suboptimally (and worse still, to not know it is performing badly), it should recognise this anomaly and seek assistance. This prompts an open question, "when an agent decides it has entered an anomalous state, what should it do? Is there a general theory of safety?"

Session: Learning Preferences and Behaviour

I'll not lie: I went to this session because it sounded creepy in a Skynet, Minority Report sort of way.

My favourite talk of the session was Learning the Preferences of Ignorant, Inconsistent Agents - Owain Evans, Andreas Stuhlmueller and Noah D. Goodman. Roughly, they are concerned with inverse reinforcement learning (IRL) (so learning utility/reward functions) from suboptimal agents, as humans often might be. A specific case they look at is time inconsistency, which is where agents make plans they later abandon. Seemingly any non-exponential discounting implies time-inconsistency, if my notes are correct. See paper for details. And a related project page: agentmodels.org

I spent the early afternoon finishing up my 'plain English explanation' for the work I was presenting at AAAI, see the page here. I wanted to have something to point my family/friends at when they ask what I work on. Also, making science accessible is good, probably.

Session: Word/Phrase Embedding

I went to this because I was speaking (briefly) at it. Also, because it is relevant to my interests, so I'll list everything.

The oral spotlights:

- Inside Out: Two Jointly Predictive Models for Word Representations and Phrase Representations - Fei Sun, Jiafeng Guo, Yanyan Lan, Jun Xu and Xueqi Cheng: Modification of the word2vec-style skip-gram/continuous-bag-of-words model including morphology, project page: InsideOut.

- Minimally-Constrained Multilingual Embeddings via Artificial Code-Switching - Michael Wick, Pallika Kanani and Adam Pocock: using artificial code-switching to help rapidly create multilingual tools, borrowing information across languages essentially.

- Generalised Brown Clustering and Roll-Up Feature Generation - Leon Derczynski and Sean Chester: I am shamefully ignorant about Brown clustering, so a lot of this was lost on me. Link to project repository, anyway.

The poster spotlights:

- Building Earth Mover's Distance on Bilingual Word Embeddings for Machine Translation - Meng Zhang, Yang Liu, Huanbo Luan, Maosong Sun, Tatsuya Izuha and Jie Hao: I may have spent this spotlight worrying about my spotlight.

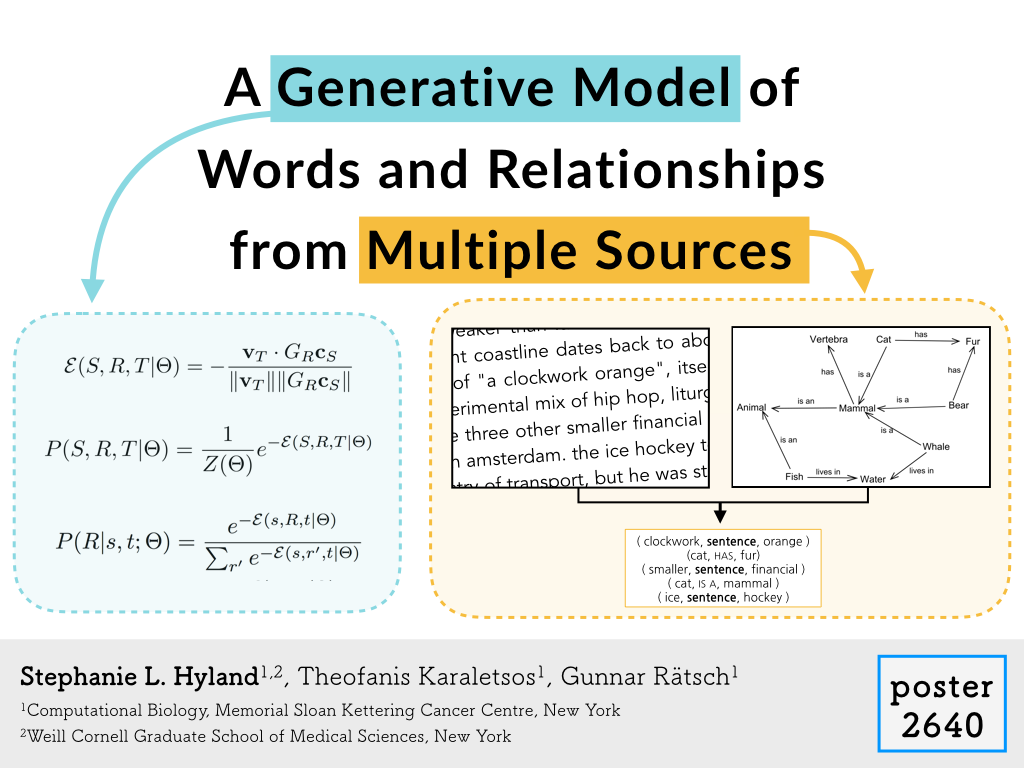

- A Generative Model of Words and Relationships from Multiple Sources - Stephanie L. Hyland (that's me) , Theofanis Karaletsos and Gunnar Rätsch: People seemed to like the slides I made for this spotlight, so I put them in the project repository with some other 'media', see here.

- Single or Multiple? Combining Word Representations Independently Learned from Text and WordNet - Josu Goikoetxea, Eneko Agirre and Aitor Soroa: work in a similar vein to mine, in the sense of combining information from 'free text' and 'structured data' (in this case WordNet).

From Proteins to Robots: Learning to Optimize with Confidence - Andreas Krause

Some interesting and important questions:

- how can an AI system autonomously explore while guaranteeing safety?

- how can we do optimised information gathering?

The former question is quite important for 'learning in the wild', and moving beyond the existing (rather successful) paradigm of test/train/validation that we have in machine learning - what happens when the data the algorithm sees depends on actions it takes?

The latter is quite interesting for cases where we want to probe some nearly-black-box system, but probing is expensive. One can use the framework of Bayesian Optimisation (Močkus, 1978), and score possible locations (to probe) by their utility in resolving the exploration/exploitation trade-off (via some kind of acquisition function, of which many have been proposed).

He discussed how one can use Gaussian processes and confidence bounds to help with this, and I'll include a pointer to Srinivas et al, 2010.

Some more paper pointers:

- Stochastic Linear Optimization under Bandit Feedback - Varsha Dani, Thomas P. Hayes, Sham M. Kakade

- Active Learning for Level Set Estimation - Alkis Gotovos, Nathalie Casati, Gregory Hitz, Andreas Krause

- Safe Exploration for Optimization with Gaussian Processes - Yanan Sui, Alkis Gotovos, Joel Burdick, Andreas Krause

- Contextual Gaussian Process Bandit Optimization - Andreas Krause, Cheng Soon Ong

(I am quite fond of Gaussian processes, in case that wasn't already obvious.)

The conclusions were:

- feedback loops abound in modern ML applications

- exploration is central but also delicate, and safety is crucial

- statistical confidence bounds allow navigating exploration-exploitation tradeoffs in a principled manner

Poster Session 1

I was presenting at this session (see my poster here), so I didn't get to look at anything else. I struggled to eat bean tacos one-handed, and I talked a lot.

Monday

Learning Treatment Policies in Mobile Health - Susan Murphy

I have Susan Murphy's paper Optimal dynamic treatment regimes on my desk as I write this, so I was pretty excited to see her speaking. And on mHealth, too! Double excitement.

It turns out that she is also involved in the Heart Steps project with Ambuj Tewari, which I wrote about a little in my NIPS post, so I'm not going to repeat myself.

The 'treatment optimisation' aspects of mHealth are interesting because it gets into the realm of HCI and psychology. You want to send the patient reminders to do a thing, but you don't want them to become habituated and ignore them, or irritated, or distracted. She mentioned the need to avoid pointlessly reminding the patient to go for a walk while they're already walking, or dangerously alerting them while they're driving. I find it uncomfortable to be reminded that my phone knows when I'm walking/driving, but if the information is being recorded anyway, you might as well use it, right? Insert something about dragnets here.

But really, mHealth provides some very exciting opportunities to do reinforcement learning. She mentioned non-stationarity as a general challenge, and suggested one could perhaps do transfer learning within a user to tackle it.

Session: Active Learning

A POMDP Formulation of Proactive Learning (Kyle Hollins Wray and Shlomo Zilberstein) was interesting. The idea is that the agent must decide which oracle to query to label a particular data point, where the underlying state is the correctness of the current set of labels. I'm not familiar enough with the active learning field to say if this formulation is especially novel, but I liked it, possibly because I like POMDPs.

Session: Privacy

I experimented with taking no notes during this session to see how it would influence my recall of the material. The trade-off here is that taking notes is a little distracting for me (as well as providing many opportunities to notice Slack/email/etc.), but does provide a lasting record.

Logical Foundations of Privacy-Preserving Publishing of Linked Data (Bernardo Cuenca Grau and Egor V. Kostylev) was strangely fascinating. They were talking about anonymisations of RDF graphs (a data type I'd been working with for my word embedding work). I'm also quite interested in information linkage (see e.g. my talk at Radical Networks 2015), so this was up my alley.

Not sure how the experiment worked out, further data required.

Session: Cognitive Systems

I was heavily overbooked for this time-slot: I wanted to see Deep Learning 1, Discourse and Question Answering (NLP 6), the RSS talks (for my friend Ozan's talk), Cognitive Systems (largely for Kazjon's talk - see below), and Machine Learning/Data Mining in Healthcare. Time turners have yet to be invented, unfortunately.

One of the recurring themes of my AAAI v. NIPS pronouncements was that AAAI has, well... more AI stuff. This session was probably the closest I got to that (unless you count the AI and Ethics workshop: I'd consider it meta-AI). People were doing reasoning without probability distributions, using first order logic! One of the presentations included this video which I found strangely distressing (to me it is - spoilers! - clearly about domestic abuse).

The talk I had come to see, Surprise-Triggered Reformulation of Design Goals (Kazjon Grace and Mary Lou Maher), along with numerous chats with Kazjon throughout the conference made me realise that computational creativity is a thing. OK, full disclosure: I am loosely involved with some generative art folks so I did sort of know this, but it hadn't occurred to me that one might use machine learning to represent or understand mental processes surrounding creativity. Neat! The idea here is that the way humans design things is iterative: you have some loosely-formed idea, and through the process of realising it, notice things you hadn't expected (experience surprise, as it were), and modify your idea accordingly. So there is interplay between the internal representation (perhaps this is the design goal) and the external representation (the realisation). So they're interested in understanding surprises: perhaps an element of a design is unusual given other elements of the design, for example. I am going to have to actually read the paper before I elaborate any further on this, but the experiments involved generating weird (but edible) recipes so I'm looking forward to it.

Very deep question raised by all of this: "can computers be creative?"

Related: what is creativity? What is art? What are computers?

AI's Impact on Labor Markets - Nick Bostrom, Erik Brynjolfsoon, Oren Etzioni, Moshe Vardi

I managed to take no notes during this panel (my notes from AAAI actually dry up around here, I hit peak exasperation with keeping my devices charged).

I have a lot of feelings about AI and labour, but I'm first going to direct attention to the Panel on Near-term Issues from the NIPS Algorithms Among Us Symposium, which had a similar lineup.

Ultimately, it is hard to solve social and political issues using technology alone, especially if those issues arise as a result of the technology itself. I'd love to automate away all the mind-numbingly boring and unfulfilling jobs humans currently do, but I don't want to remove anyone's livelihood in the process. I don't think it's sufficient to say that society will 'figure it out somehow', especially in countries such as the USA where there is so little protection from poverty and homelessness. That said, I don't know what the solution is (except for some rather radical ideas with limited empirical support for their efficacy), and I don't know if it will, or should, come from the AI research community.

Poster Session 2

I got slightly side-tracked by ranting about how broken academic publishing is. Shoutout to the Mozilla Contributorship Badges project for trying to deal with the credit-assignment problem, for one.

Tuesday

Towards Artificial General Intelligence - Demis Hassabis

Google DeepMind are arguably the machine learning success story of the last year, given their Atari Nature paper and AlphaGo result (although the match against Lee Sedol in March will be more interesting). I'm very happy to see computer games featuring so prominently for evaluating and developing AGI: so much that I spent the session after this talk sketching out a project involving Dota 2, which I think could be a very interesting application of deep reinforcement learning, if only the metagame would stabilise long enough to allow for acquisition of sufficient training data!

Anyway, this talk mostly convinced me that DeepMind are doing cool stuff, which I imagine was the intended effect. Hassabis was coming from a pleasantly principled place. They do seem genuinely interested in AGI, rather than for example, beating benchmarks with yet deeper networks. I don't mean to imply that beating benchmarks isn't important, but I think the types of discoveries one makes in the pursuit of larger/more abstract goals are quite important for the intellectual development of a field which can easily become dominated by engineering successes. So yes, the talk had the intended effect.

Session: Reinforcement Learning I

Distance Minimization for Reward Learning from Scored Trajectories - Benjamin Burchfiel, Carlo Tomasi and Ronald Parr: this is about IRL with suboptimal experts (a popular and interesting topic). In this case, the 'demonstrator' need not be an expert but can operate as a judge, assigning scores to demonstrators. The real-world example would be of a sports coach who's no longer capable of creating expert trajectories (that is, demonstrating optimally) but who can still accurately rate demonstrations from others, if they're available. They also study the case where the judge's scores are noisy and find the algorithm robust.

Inverse Reinforcement Learning through Policy Gradient Minimization - Matteo Pirotta and Marcello Restelli: more IRL through parametrising the expert's reward function, but here it is no longer necessarily to repeatedly compute optimal policies, so this should be quite efficient. Also, this algorithm is called GIRL.

Poster Session 3

Some interesting posters (highly non-exhaustive list, but I'm exhausted):

- Predicting ICU Mortality Risk by Grouping Temporal Trends from a Multivariate Panel of Physiologic Measurements - Yuan Luo, Yu Xin, Rohit Joshi, Leo Celi and Peter Szolovits

- Reinforcement Learning with Parameterized Actions - Warwick Masson, Pravesh Ranchod and George Konidaris

- Siamese Recurrent Architectures for Learning Sentence Similarity - Jonas Mueller and Aditya Thyagarajan

Wednesday

Sessions: Reinforcement Learning II & III

-

Model-Free Preference-Based Reinforcement Learning - Christian Wirth, Johannes Fürnkranz and Gerhard Neumann: I didn't actually see this talk, but the paper has a number of interesting words in its title, so it must be good.

-

Increasing the Action Gap: New Operators for Reinforcement Learning - Marc G. Bellemare, Georg Ostrovski, Arthur Guez, Philip S. Thomas and Remi Munos: this was a good talk. Basically, during value iteration one applies the Bellman operator to the state-action value function (Q-function). The fixed point of the operator is the optimal Q-function, which induces (greedily) the optional policy. They argue that this operator is inconsistent, in that it suggests nonstationary policies. They resolve this by definining a 'consistent Bellman operator' which preserves local stationarity and show that it increases the action gap (the value difference between the best and second best actions). The action gap is relevant because it can allow for selecting the same (optimal) action even when estimates of the value function are noisy. And a link to the Arcade Learning Environment.

- Deep Reinforcement Learning with Double Q-Learning - Hado van Hasselt, Arthur Guez and David Silver: more from DeepMind. I swear I am not a DeepMind fangirl. Setup here: Q-learning can result in overestimates for some action values. Using DQN (deep Q-learning algorithm) they find that this happens often and impacts performance. They solve the problem by showing how to generalise Double Q-learning to arbitrary function approximation (rather than just tabular Q functions). So this paper seems like a natural progression for Double Q-learning.

Conclusion

Exploration-exploitation trade-offs are everywhere. At this stage in my career, I consider going to conferences a largely exploratory activity: I can learn a little (or more) about a lot of things and get an idea for the kinds of research going on. For the people who spend conferences meeting with their collaborators, it's more about exploitation. (For the appropriate interpretation of that word.) I am a little fatigued of exploration right now - I'm still processing things I saw at NIPS, so I was not well positioned to make the most out of AAAI. I kept wanting to run off and write code in a corner, but who does that? Well, I do that. I do that right now.